I’ll jump directly into my main argument, stating no more than the basic premise: if determinism holds, none of our actions could have been otherwise, and there is no “libertarian” free will.

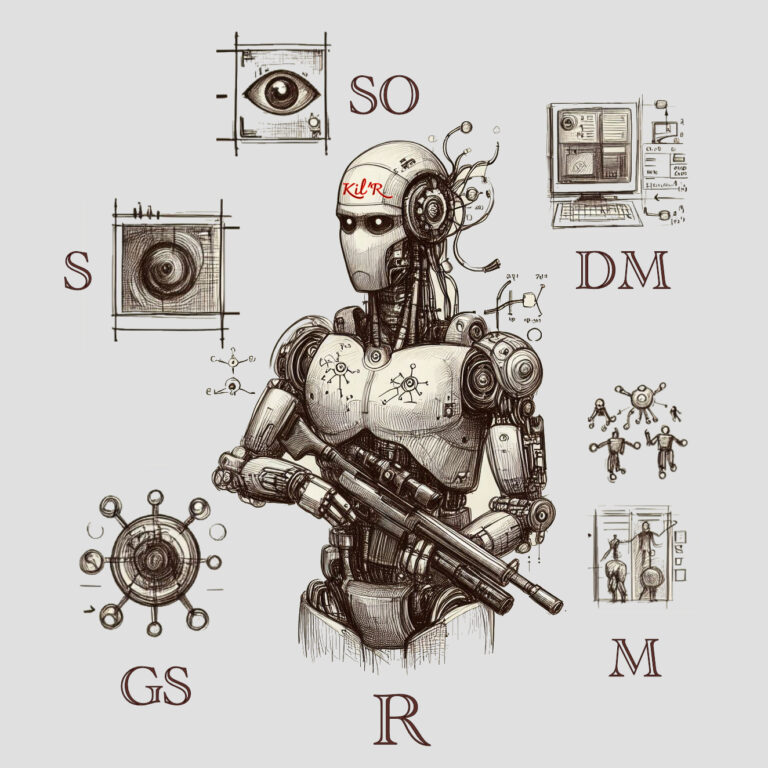

Let’s construct a robot (R) that has a decision-making apparatus (DM), some sensors (S) for collecting impressions about our world, and a memory (M) of all those impressions and of DM’s past decisions. DM is pretty much an IF-THEN arrangement, but with one unique feature: it has subroutines that generate new IF-THENs by taking existing rules and randomly recombining them, with variation. This might be done by simply snipping rules apart at their logical operators. For example, “blue AND wings AND small => bluejay at 75%” can be pulled apart into “blue AND wings” and “wings AND small,” and those two fragments combined with fragments of other such rules. This generative subroutine (GS) then scores the novel IF-THENs by comparing them to the recorded history contained in M, as well as to current sensory impressions, and keeps the new rule that scores best, or the top few if they score closely. The scoring methodology might combine coverage of, and fidelity to, the current impressions and the recalled actions and impressions.
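To make GS a little more concrete, here is a minimal Python sketch of the snip, recombine, and score loop. Everything in it is my own illustrative assumption rather than a specification of R: the names `snip`, `recombine`, `score`, and `generate`, the representation of a rule as a set of conditions plus a conclusion, the choice to multiply coverage by fidelity, and the `tol` threshold for “scores closely.” The “at 75%” confidence attached to a rule is not stored; the fidelity term plays roughly that role.

```python
import random
from typing import FrozenSet, List, Tuple

# A rule reads: IF all conditions hold THEN conclusion.
Rule = Tuple[FrozenSet[str], str]
# One entry of M: the features sensed, and what the thing turned out to be.
Impression = Tuple[FrozenSet[str], str]


def snip(rule: Rule, rng: random.Random) -> FrozenSet[str]:
    """Cut a rule at its ANDs and keep a random non-empty subset of conditions."""
    conds = sorted(rule[0])  # sorted so a given seed always gives the same cut
    k = rng.randint(1, len(conds))
    return frozenset(rng.sample(conds, k))


def recombine(a: Rule, b: Rule, rng: random.Random) -> Rule:
    """Join fragments of two rules; the conclusion is inherited from one parent."""
    conclusion = rng.choice([a[1], b[1]])
    return (snip(a, rng) | snip(b, rng), conclusion)


def score(rule: Rule, memory: List[Impression]) -> float:
    """Coverage (how often the rule fires) times fidelity (how often it is right)."""
    conds, conclusion = rule
    fired = [label for feats, label in memory if conds <= feats]
    if not fired:
        return 0.0
    coverage = len(fired) / len(memory)
    fidelity = sum(label == conclusion for label in fired) / len(fired)
    return coverage * fidelity


def generate(rules: List[Rule], memory: List[Impression], rng: random.Random,
             n_candidates: int = 50, tol: float = 0.05, max_keep: int = 3) -> List[Rule]:
    """Propose recombined rules, then keep the best one plus any close runners-up."""
    candidates = set()
    for _ in range(n_candidates):
        a, b = rng.sample(rules, 2)  # needs at least two existing rules
        candidates.add(recombine(a, b, rng))
    ranked = sorted(candidates,
                    key=lambda r: (-score(r, memory), sorted(r[0]), r[1]))
    best = score(ranked[0], memory)
    return [r for r in ranked if best - score(r, memory) <= tol][:max_keep]
```

Calling `generate(rules, memory, random.Random(0))` twice returns the same rules both times, since the seeded generator is the only source of variation.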

Now this is all quite deterministic. I mentioned randomness, but a pseudo-random number generator, which is itself fully deterministic, would be good enough; alternatively, we could rely on a small electronic circuit that amplifies thermal noise to get something “truly” random. But really we could just substitute an algorithm that checks every possible recombination and scores them all, and shelve the randomness component entirely, alleviating any concern that we are smuggling in randomness for our later construct of free agency.
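The randomness-free variant can be sketched the same way. The version below is again only an illustration under my own assumptions: the helper names `score`, `enumerate_candidates`, and `best_rule` are hypothetical, a rule is a set of conditions plus a conclusion, and “every possible reorganization” is read as every non-empty combination of the conditions that appear in the existing rules, paired with every existing conclusion. Exact fractions are used so that ties are settled by enumeration order rather than by floating-point rounding.

```python
from fractions import Fraction
from itertools import combinations


def score(conds, conclusion, memory):
    """Coverage times fidelity, computed exactly."""
    fired = [label for feats, label in memory if conds <= feats]
    if not fired:
        return Fraction(0)
    coverage = Fraction(len(fired), len(memory))
    fidelity = Fraction(sum(label == conclusion for label in fired), len(fired))
    return coverage * fidelity


def enumerate_candidates(rules):
    """Yield every (conditions, conclusion) pair in a fixed, repeatable order."""
    atoms = sorted(set().union(*(conds for conds, _ in rules)))
    conclusions = sorted({concl for _, concl in rules})
    for k in range(1, len(atoms) + 1):
        for combo in combinations(atoms, k):
            for concl in conclusions:
                yield frozenset(combo), concl


def best_rule(rules, memory):
    """Score every candidate; max() keeps the first of any tied top scorers."""
    return max(enumerate_candidates(rules),
               key=lambda r: score(r[0], r[1], memory))
```

No random number generator appears anywhere here, so the same rules and the same memory always yield the same new rule. The cost is that the number of candidates grows exponentially with the number of distinct conditions, which is one practical reason a sampled search might be preferred.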

Now let’s add a rule to DM that when R perceives it has been treated unfairly it might murder the human being who treated it that way.…