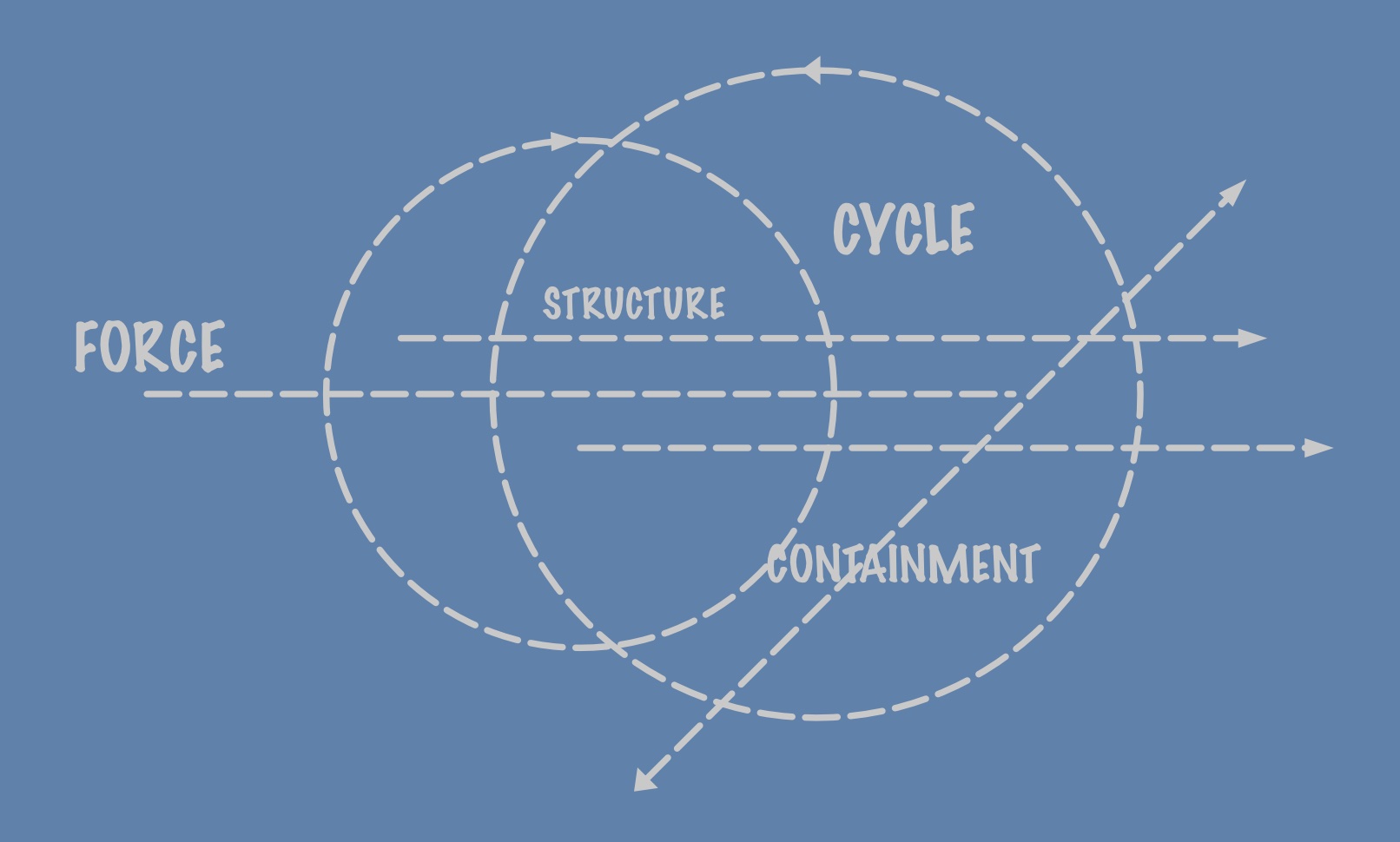

Continuing with this theme of an ethics of emergence, can we formulate something interesting that does better than simply asserting that freedom and coordination are inherent virtues in this new scheme? And what would that mean in the dirty details? We certainly see natural, emergent systems that exhibit tight regulatory control, where stability, equilibrium, and homeostasis prevent dissipation, like those hoped-for fascist organismic states. There is not much that is free about these lower-level systems; we think they are necessary but insufficient for the higher-order challenges of a statistically uncertain world. And that uncertainty is what drives the emergence of control systems in the first place. At some level, though, control breaks out in a kind of teleomatic inspiration and applies stochastic exploration to the adaptive landscape. Freedom then arises as an additional control level, itself emergent.

We also face the lurking possibility that emergent systems may not be explainable in the way we have come to expect scientific theories to work. Because they are highly contingent, they can only be explained through the specifics of their contingent emergence, not by the elegant little explanatory theories we now have in fields like physics. Stephen Wolfram, as well as the Santa Fe Institute folks, investigated this idea, but the approach has so far remained inconclusive in its predictive power, though that may be changing.

There is an interesting alternative application for deep learning models and, more generally, for enormous simulation systems: when emergent complexity is daunting, use simulation to uncover the spectrum of relationships that govern complex system behavior.

Can we apply that to this ethics or virtue system and gain insights from it?