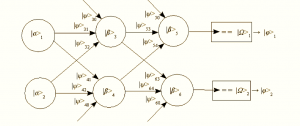

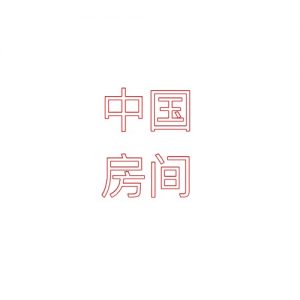

Quanta has a fair roundup of recent advances in deep learning. Most interesting is the recent performance on natural language understanding tests, which is close to or exceeds mean human performance. Inevitably, John Searle’s Chinese Room argument is brought up, though the author of the Quanta article suggests that inferring the Chinese translational rule book from the data itself is slightly different from the original thought experiment. In the Chinese Room there is a person who knows no Chinese but has a collection of translational reference books. She receives texts through a slot, dutifully looks up the translation of each text, and passes out the result. “Is this intelligence?” is the question, and it serves as a challenge to the Strong AI hypothesis. With statistical machine translation methods (and their alternative mechanistic implementation, deep learning), the rule books have been inferred by looking at translated texts (“parallel” texts as we say in the field). Looking at a large enough corpus of parallel texts yields greater coverage of translated variants, as well as some inference of pragmatic issues in translation and corner cases.

As a practical matter, it should be noted that modern, professional translators often use translation memory systems that contain idiomatic—or just challenging—phrases that they can reference when translating new texts. The understanding resides in the original translator’s head, we suppose, and in the correct application of the rule to the new text by checking for applicability according to, well, some other criteria that the translator brings to bear on the task.
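To make the translation memory idea concrete, here is a minimal sketch of a fuzzy lookup in Python. It is not how any particular commercial tool works: the memory contents, the `lookup` function, and the 0.8 similarity threshold are all illustrative assumptions, and the matching uses the standard library's `difflib.SequenceMatcher` rather than whatever scoring a real system applies.

```python
from difflib import SequenceMatcher

# Hypothetical translation memory: previously translated source phrases
# mapped to the translations a human translator stored for them.
TRANSLATION_MEMORY = {
    "it's raining cats and dogs": "il pleut des cordes",
    "once in a blue moon": "tous les trente-six du mois",
}


def lookup(segment, memory=TRANSLATION_MEMORY, threshold=0.8):
    """Return (stored_source, translation, score) for the best fuzzy match.

    Returns None when no stored phrase is at least `threshold` similar,
    which leaves the segment for the translator to handle unaided.
    """
    best = None
    for source, target in memory.items():
        # Character-level similarity in [0, 1] between the new segment
        # and a phrase already in the memory.
        score = SequenceMatcher(None, segment.lower(), source).ratio()
        if score >= threshold and (best is None or score > best[2]):
            best = (source, target, score)
    return best


match = lookup("It's raining cats and dogs!")
if match is not None:
    print(f"suggested translation: {match[1]} (similarity {match[2]:.2f})")
```

The lookup only proposes a candidate. Deciding whether the stored rendering actually applies to the new text is still left to the translator, which is the point of the paragraph above.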

In the General Language Understanding Evaluation (GLUE) tests described in the Quanta article, the systems are inferring how to answer Wh-style queries (who, what, where, when, and how) as well as identify similar texts.… Read the rest