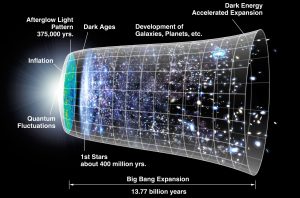

The question of whether we, as people, have free will is both abstract and occasionally deeply relevant. We certainly act as if we have something like libertarian free will, and we have built entire systems of justice around this idea, holding people responsible for choices that result in harm to others. But that may be somewhat illusory for several reasons. First, if we take a hard deterministic view of the universe as a clockwork-like collection of physical interactions, our wills are just the mindless outcome of a calculation of sorts, driven by a wetware calculator whose state is completely determined by molecular history. Second, until very recently there was some experimental evidence that our decision-making occurs before we become consciously aware of the decision itself.

But this latter claim appears to be without merit, as reported in this Atlantic article. Instead, the brain signal previously believed to be related to choice (the Bereitschaftspotential, or readiness potential) may just be associated with general waves of neural activity. The new experimental evidence puts the timing of action in line with conscious awareness of the decision. More experimental work is needed, as always, but the tentative result suggests a tighter coupling of conscious awareness with decision making.

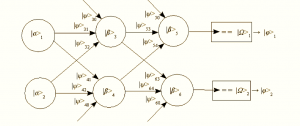

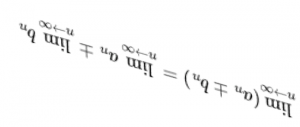

Indeed, this newer experimental result gets closer to my suggested model of how modular systems, combined with perceptual and environmental uncertainty, can produce what is effectively free will (or at least a functional model for a compatibilist position). Jettisoning the Chaitin-Kolmogorov complexity part of that argument and just focusing on the minimal requirements for decision making in the face of uncertainty, we know we need a thresholding apparatus that fires various responses given a multivariate statistical topology.…
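To make the idea of a thresholding apparatus a little more concrete, here is a minimal Python sketch. It is only an illustration under assumptions of my own choosing, not the model referred to above: the function name `fire_responses`, the two-feature stimulus, the weights, the thresholds, and the Gaussian noise standing in for perceptual uncertainty are all hypothetical.

```python
import random


def fire_responses(stimulus, responses, noise_sd=0.1, rng=None):
    """Return the names of responses whose noisy activation crosses threshold.

    stimulus  -- list of feature values (the multivariate input)
    responses -- dict mapping a response name to (weights, threshold)
    noise_sd  -- standard deviation of Gaussian noise on each feature
                 (an assumed stand-in for perceptual uncertainty)
    """
    rng = rng or random.Random()
    fired = []
    for name, (weights, threshold) in responses.items():
        # Each response module sees its own noisy copy of the stimulus.
        noisy = [x + rng.gauss(0.0, noise_sd) for x in stimulus]
        # Weighted sum of the noisy features is the module's activation.
        activation = sum(w * x for w, x in zip(weights, noisy))
        if activation >= threshold:
            fired.append(name)
    return fired


# Hypothetical response modules: (weights over two features, threshold).
RESPONSES = {
    "approach": ([1.0, -0.5], 0.4),
    "avoid": ([-0.5, 1.0], 0.4),
}

if __name__ == "__main__":
    rng = random.Random(0)
    # A stimulus near the "approach" threshold: the outcome varies by trial.
    for _ in range(5):
        print(fire_responses([0.45, 0.0], RESPONSES, noise_sd=0.2, rng=rng))
```

With the noise set to zero, the same stimulus always produces the same response. With noise, a stimulus near a threshold sometimes fires a response and sometimes does not, which is the kind of behavior the argument relies on.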