The meaning of words and phrases can be a bit hard to pin down. Indeed, the meaning of meaning itself is problematic. I can point to a dictionary and say, well, there is where we keep the meanings of things, but that is just a record of the way in which we use the language. I’m personally fond of a philosophical perspective on this matter of meaning that relies on a form of holism. That is, words and their meanings are defined by our uses of them, our historical interactions with them in different contexts, and subtle distinctive cues that illuminate how words differ and compare. Often, but not always, the words are also tied to things in the world, and therefore have a fastness that resists distortions and distinctions.

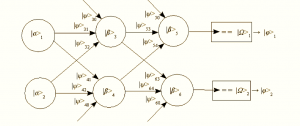

This is, of course, a critical area of inquiry when trying to create intelligent machines that deal with language. How do we imbue the system with meaning, represent it within the machine, and apply it to novel problems in ways that show intelligent behavior? Any approach to the problem must therefore achieve some semblance of intelligence in a fairly rigorous way, since we are simulating it with logical steps.

The history of philosophical and linguistic interest in these topics is fascinating, ranging from Wittgenstein’s notion of a language game that builds up rules of use to Firth’s formalization of the collocation of words as critical to meaning. In artificial intelligence, this concept of collocation has been extended further to include the interchangeability of contexts. Thus, boat and ship occur in more similar contexts than boat and bank.
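To make the boat, ship, and bank comparison concrete, here is a minimal sketch of the idea, not any particular system's method. It assumes a tiny made-up corpus, a context window of two words on either side, and raw co-occurrence counts compared by cosine similarity; the names `CORPUS`, `context_vectors`, and `cosine` are invented for illustration.

```python
from collections import Counter, defaultdict
from math import sqrt

# Toy corpus, invented for illustration only.
CORPUS = [
    "the boat sailed across the sea",
    "the ship sailed across the sea",
    "the boat docked at the harbor",
    "the ship docked at the harbor",
    "the bank approved the loan",
    "the bank raised the interest rate",
]

def context_vectors(sentences, window=2):
    """Count, for every word, the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

vectors = context_vectors(CORPUS)
boat_ship = cosine(vectors["boat"], vectors["ship"])
boat_bank = cosine(vectors["boat"], vectors["bank"])
print(f"boat~ship: {boat_ship:.3f}  boat~bank: {boat_bank:.3f}")
```

On this toy corpus, boat and ship come out more similar than boat and bank because they share the same neighboring words. Most of the remaining overlap between boat and bank comes from the word "the", which is why practical systems typically reweight or filter very frequent words.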

A general approach to acquiring these contexts is based on the idea of dimensionality reduction in various forms.…