An unexpectedly quick move to Northern Arizona thrust my wife and me into fire and monsoon seasons. The latter term is debatable: monsoons typically involve a radical shift in winds in Southeast Asia. Here the westerlies keep a steady rhythm through the year. The U.S. desert southwest has also adopted the Arabic term “haboob” in recent decades to refer to massive dust storms. If there is a pattern to loanword adoption, it might be a matter of economy. Where a single, unique term can take the place of an elongated description, the loanword wins, even if the nuances of the original get discarded. This extends a tendency we show in child language acquisition to view different words as being, well, different, even if a strong claim of “one word per meaning” is likely unjustified. We search for replacement terms that provide economy, and we even relish the insider knowledge that comes with the new lexical entry.

So, as afternoons break out into short, heavy downpours, we dart in and out of hardware stores getting electrical fishing poles, screw anchors, and #10 8/32nd microbolts to fix an installation problem with a ceiling fan. We meet with contractors and painters who rush through the intermittent squalls. And we break all this up by exploring new restaurants and hitting the local galleries, debating how this or that sculptor subverts expectations about contemporary trends in southwestern art.

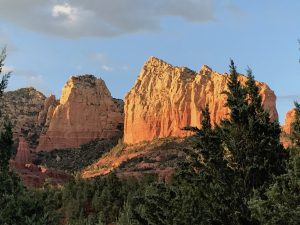

But there is a stability to the forest and canyon around our new house. Deer wander through, though less often now that the rain has filled the red rock canyons with watering holes, sparing them long sojourns to Oak Creek for water. A bobcat nestled for half a morning on our lower deck overlooking the canyon, quietly scanning for prey.…