Artificial Neural Networks (ANNs) were, from early on in their formulation as Threshold Logic Units (TLUs) or Perceptrons, mostly focused on non-sequential decision-making tasks. With the invention of back-propagation training methods, the application to static presentations of data became somewhat fixed as a methodology. During the 90s, Support Vector Machines became the rage, and then Random Forests and other ensemble approaches held significant mindshare. ANNs receded into the distance as a quaint, historical approach that was fairly computationally expensive and opaque when compared to the other methods.

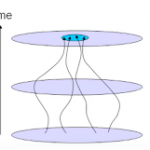

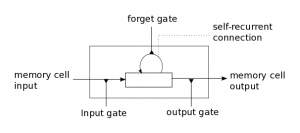

But Deep Learning has brought the ANN back through a combination of improvements, both minor and major. The most important enhancements include pre-training of the networks as auto-encoders prior to pursuing error-based training using back-propagation or Contrastive Divergence with Gibbs Sampling. The other critical enhancement derives from the work of Schmidhuber and others in the 90s on managing temporal presentations to ANNs so they can effectively process sequences of signals. This latter development is critical for processing speech, written language, grammar, changes in video state, etc. Back-propagation without some form of recurrent network structure or memory management washes out the error signal that is needed for adjusting the weights of the networks. And it should be noted that increased compute firepower using GPUs and custom chips has accelerated training enough that experimental cycles are now practical.
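The "washing out" of the error signal can be seen in a toy setting. What follows is only a minimal sketch, assuming a single scalar recurrent unit with a tanh activation and a fixed recurrent weight; the function name `gradient_through_time` and the parameters `w`, `inputs`, and `h0` are hypothetical and chosen purely for illustration. It tracks how much a change in the initial state still influences the state after each time step, which is the quantity back-propagation through time relies on.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Track d h_t / d h_0 for a scalar unit h_t = tanh(w * h_{t-1} + x_t).

    Illustrative assumption: one unit, one recurrent weight, no gating.
    """
    h = h0
    grad = 1.0           # d h_0 / d h_0
    history = []
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - tanh^2(.)) = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
        history.append(grad)
    return history

# With |w| < 1 every factor has magnitude below 1, so the product shrinks
# geometrically with the number of time steps.
grads = gradient_through_time(w=0.5, inputs=[0.1] * 50)
print("after  1 step :", abs(grads[0]))
print("after 50 steps:", abs(grads[-1]))
```

Under these assumptions each step multiplies the signal by a factor smaller than one, so the error reaching early time steps becomes too small to adjust the weights in any useful way. Memory-managing architectures of the kind alluded to above are designed to keep that signal from decaying.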

Note that these are what might be called “computer science” issues rather than “brain science” issues. Researchers are drawing rough analogies to some observed properties of real neuronal systems (neurons fire and connect together) but then are pursuing a more abstract question as to how a very simple computational model of such neural networks can learn.