My wife got a Jeep Wrangler Unlimited Rubicon a few days back. It has necessitated a new education in off-road machinery like locking axles, low 4, and disconnectable sway bars. It seemed the right choice for our reinsertion into New Mexico, a land that was only partially accessible by the cheap, whatever-you-can-afford vehicles we drove twenty years ago as grad students. So we had to start driving to random off-road locations, and we found Faulkner’s Canyon in the Robledos. Billy the Kid used this area as a refuge at one point, and we searched out his hidey-hole this morning, but we ran out of LTE coverage and couldn’t confirm the specific site until we returned from our adventure. We will try another day!

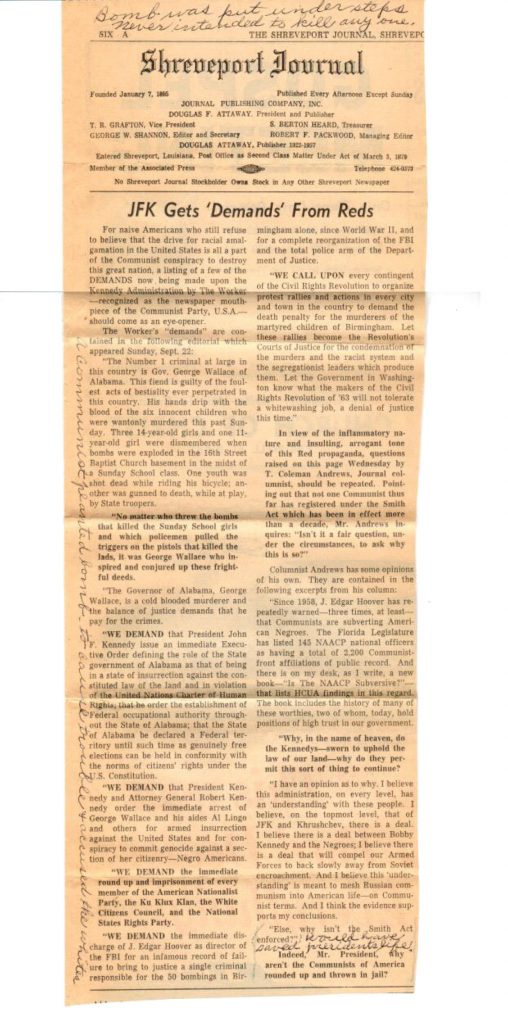

Billy the Kid was, of course, a killer of questionable moral standing.

With Neil Gorsuch’s nomination to SCOTUS, his role in the legal and moral philosophies surrounding assisted suicide has come under scrutiny. In everyday discussions, the topic often centers on the notion of dignity for the dying. Indeed, the autonomy of the person (and with it some assumption of rational choice) is weighed against what the alternative to a human-induced death would bring: pain, discomfort, loss of physical or mental faculties, and also forward-looking speculation about these possibilities.

Now, I combined legal and moral in the same sentence because that is also one way to consider how law is or ought to be formulated. But, in fact, one can also claim that the two don’t need to overlap: law can exist simply as a system of rules that carries no moral repercussions, and, if the two have a similar effect on behavior, that is merely happenstance.…