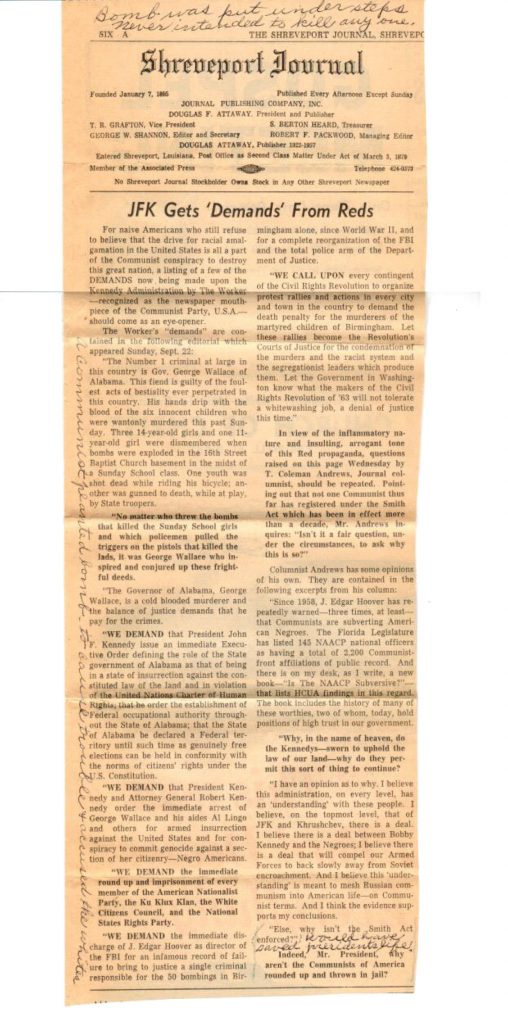

In the modern American political climate, I constantly find myself at sea trying to unravel the motivations and thought processes of the Republican Party. The best summation I can arrive at involves the obvious manipulation of the electorate (though that is not terrifically new) combined with a persistent avoidance of evidence and facts.

In my day job, I research a range of topics, trying to get enough of a grasp on what we do and do not know that I can form a plan that innovates from the known facts toward the unknown. Here are a few recent investigations:

- What is the state of thinking about the origins of logic? Logical rules fall into broad classes that range from the uncontroversial (modus tollens, propositional logic, predicate calculus) to the speculative (multivalued and fuzzy logic, or quantum logic, for instance). In most cases we assume, based on linguistic convention, that they are true and then demonstrate their extension, despite the observation that they are tautological. Synthetic knowledge has no similar limitations but is assumed to be undergirded by the logical basics.

- What were the early Christian heresies, how did they arise, and what was their influence? Marcion of Sinope is perhaps the most interesting of these, in parallel with the Gnostics, asserting that the cruel tribal god of the Old Testament was distinct from the New Testament Father, and proclaiming perhaps (see various discussions) a docetic Jesus figure. The leading “mythicists” like Robert Price are invaluable in this analysis (ignore the first 15 minutes of nonsense). The thin braid of early Christian history and the constant humanity that arises in morphing the faith before settling down after Nicaea (well, and then after Martin Luther) remind us that abstractions and faith have a remarkable persistence in the face of cultural change.