Jürgen Schmidhuber’s work on algorithmic information theory and curiosity is worth a few takes, if not more, because he has done something that is at once flawed and rather brilliant. The flaws emerge when we look closely at the motivations for ideas like beauty (are symmetry and low-complexity encoding enough to explain sexual attraction? Well-understood evolutionary psychology is probably a better bet), but the core of his argument is worth considering.

If induction is an essential component of learning (and we might suppose it is, for argument’s sake), then why continue to examine different parameterizations of possible models for induction? Why be creative about how to explain things, as we expect of scientists and even idolize in them?

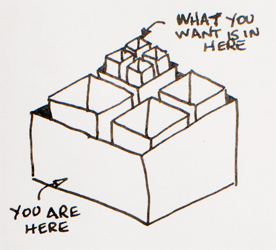

So let us assume that induction is explained by the compression of patterns into better and better models, in an information-theoretic sense. Given this, Schmidhuber makes the startling leap that better compression and better models are best achieved by information-harvesting behavior that seeks out novelty in the environment. Thus curiosity. Thus the implementation of action in support of ideas.
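
To make the idea concrete, here is a minimal sketch of curiosity as "compression progress": the reward for an observation is the number of bits saved once the model has learned from it. Everything in it is an assumption for illustration. `FrequencyModel` and `compression_progress` are hypothetical names, and the order-0 symbol counter is a deliberately crude stand-in for a real learned compressor, not Schmidhuber's own formulation.

```python
import math
from collections import Counter


class FrequencyModel:
    """Laplace-smoothed symbol counter; a toy stand-in for a learned compressor."""

    def __init__(self, alphabet):
        self.alphabet = list(alphabet)
        self.counts = Counter()
        self.total = 0

    def prob(self, symbol):
        # Add-one smoothing keeps every symbol's probability above zero.
        return (self.counts[symbol] + 1) / (self.total + len(self.alphabet))

    def code_length(self, data):
        """Bits needed to encode `data` under the current, frozen model."""
        return sum(-math.log2(self.prob(s)) for s in data)

    def update(self, data):
        """Learn from `data` by counting its symbols."""
        for s in data:
            self.counts[s] += 1
            self.total += 1


def compression_progress(model, history, observation):
    """Bits saved on history + observation after learning from observation."""
    data = history + observation
    before = model.code_length(data)
    model.update(observation)
    after = model.code_length(data)
    return before - after


# Familiar input: the model has already seen plenty of this pattern.
seasoned = FrequencyModel("abcd")
seasoned.update("a" * 200)
familiar = compression_progress(seasoned, "a" * 200, "a" * 40)

# Novel but learnable input: a fresh model meets a strongly skewed stream.
novel = compression_progress(FrequencyModel("abcd"), "", "a" * 40)

# A balanced stream that this order-0 model cannot compress at all,
# so it plays the role of noise here (a richer model would see the repeat).
noise = compression_progress(FrequencyModel("abcd"), "", "abcd" * 10)

print(f"familiar: {familiar:.2f} bits")
print(f"novel:    {novel:.2f} bits")
print(f"noise:    {noise:.2f} bits")
```

Under these toy assumptions the novel-but-learnable stream earns by far the largest reward, while both the already-familiar stream and the incompressible one earn almost nothing. That is the shape of the argument: interest peaks where there is still a pattern left to learn.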

I proposed a similar model to explain aesthetic preferences for mid-ordered complex systems of notes, brush-strokes, etc. around 1994, but Schmidhuber’s approach has the benefit of not only characterizing the limitations and properties of aesthetic systems, but also justifying them. We find interest because we are programmed to find novelty, and we are programmed to find novelty because we want to optimize our predictive apparatus. The best way to optimize is to search actively along the contours of the perceivable (and quantifiable) universe, isolating the unknown patterns that improve our current model.…

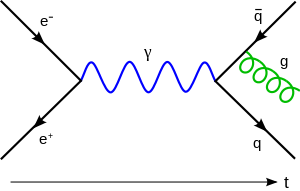

The central theme in Signals and Noise is that of the inverse problem and its consequences: given an ocean of data, how does one uncover the true signals hidden in the noise? Is there even such a thing? Somewhere in our skulls there is an obsessive balancing act between apophenia and modeling.
