How exactly deep learning models do what they do is, at the very least, elusive. Take image recognition as a task. We know that the hidden layers of these networks infer decision-making criteria. In Convolutional Neural Networks (CNNs), we know further that local receptive fields (or their simulated equivalent) provide a collection of filters that emphasize image features in different ways, from edge detection to shift-invariant reductions, before the result is passed to a learned categorizer. Yet the dividing lines between a chair and a small loveseat, or between two faces, are hidden within some non-linear equation composed of these field representations, with weights tuned by exposure to training exemplars.
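To make the "knowable" part concrete, here is a minimal sketch in plain Python of a single filter of the kind described above: the standard Sobel kernel for horizontal intensity changes, slid over a tiny image. In a real CNN the kernel weights are learned rather than hand-set, and the names `SOBEL_X` and `convolve2d` are illustrative assumptions, not taken from any library.

```python
# Hand-set Sobel kernel: responds to left-to-right brightness changes
# (vertical edges). In a trained CNN, kernels like this are learned.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation (what CNN libraries usually call 'convolution')."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum over the local receptive field anchored at (i, j).
            s = sum(kernel[di][dj] * image[i + di][j + dj]
                    for di in range(kh) for dj in range(kw))
            row.append(s)
        out.append(row)
    return out

# Toy 5x5 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9] for _ in range(5)]
for row in convolve2d(image, SOBEL_X):
    print(row)  # prints [0, 36, 36] on each of three lines
```

The filter fires only where brightness changes from left to right and returns zeros on a uniform patch. What one such kernel does is easy to state; what the stacked, learned combination of many of them decides is the part that stays elusive.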

This elusiveness was at least part of the reason that neural networks, and machine-learning-based approaches more generally, have held a complicated position in AI research. If you can't explain how they work, or even fairly characterize their failure modes, maybe we should work harder to understand what supports those decision criteria rather than just build black boxes to execute them?

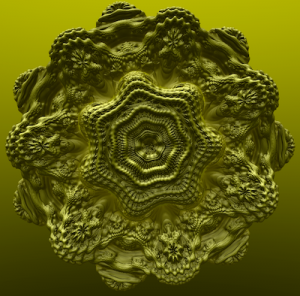

So when groups use deep learning to produce visual artworks, like the work recently auctioned by Christie's for USD 432K, we can be reassured that the murky issue of aesthetics in art appreciation is at least matched by elusiveness in the machine that produced it.

Or is it?

Let's take Wittgenstein's ideas about aesthetics as a perhaps slightly murky point of comparison. In Wittgenstein, we are almost always looking at what are effectively games played between and among people. In language, the rules are shared within a culture, a community, and even between individuals. They include semantic limits, dialogue conventions, standardized usages, linguistic pragmatics, expectations, allusions, and much more. …