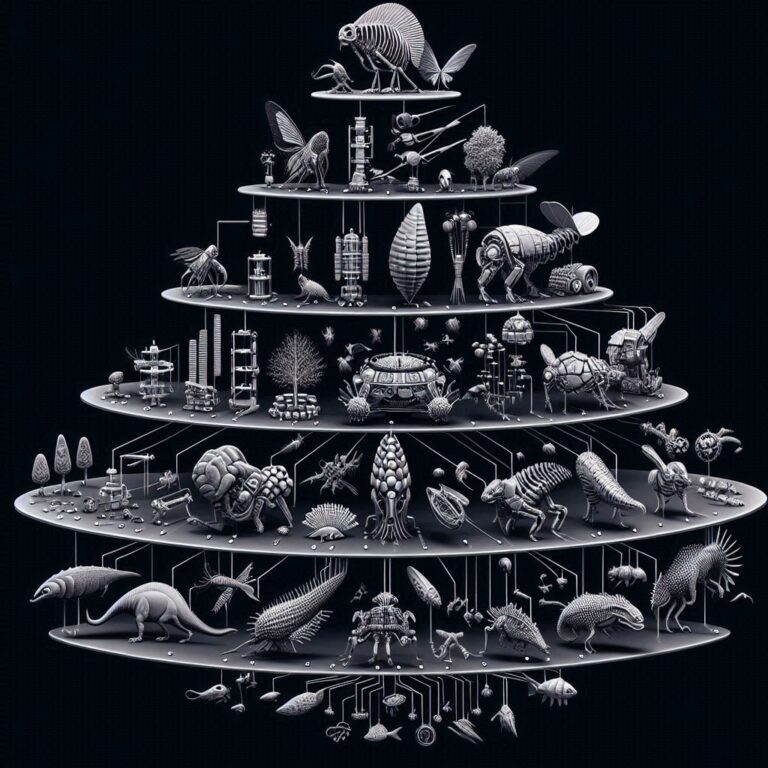

I have a longstanding interest in the concept of emergence as a way of explaining a wide range of human ideas and the natural world. We have this incredible algorithm of evolutionary change that creates novel life forms. We have, according to mainstream materialist accounts in the philosophy of mind, a consciousness that may have a unique ontology (what really exists) of subjective experiencers, qualia, and intentionality, but that is also somehow emergent from the meat of the brain (or supervenes on it, or is an epiphenomenon, etc.). That emergence may be weak or strong in various accounts. Strong emergence means something like the idea that a new thing is added to the ontology, while weak emergence means something like we just don’t know enough yet to find the reduction of the concept to its underlying causal components. If we did know enough, the emergent thing would not really be something new in this grammar of ontological necessity.

There is also the problem of computational irreducibility (CI), which has been championed by Wolfram. In CI, there are classes of computations whose outcomes cannot be predicted by any simpler algorithm. This seems to open the door to a strong concept of emergence: we have to run the machine to get the outcome; there is no possibility (in theory!) of reducing the outcome to any lesser approximation. I’ve brought this up as a defeater of the Simulation Hypothesis, suggesting that a simulation of the universe as we see it cannot be reduced to anything less complex than that universe (assuming perfect coherence in the limit).
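To make the "we have to run the machine" point concrete, here is a minimal sketch using the Rule 30 cellular automaton, which Wolfram has proposed as an example of computational irreducibility. Irreducibility of Rule 30 is conjectured rather than proven, and the function names and the `width` parameter below are my own illustrative choices. The only way this sketch knows the center cell at step n is by computing every earlier step.

```python
def rule30_step(cells: list[int]) -> list[int]:
    """Apply one step of Rule 30: new = left XOR (center OR right)."""
    n = len(cells)
    return [
        cells[(i - 1) % n] ^ (cells[i] | cells[(i + 1) % n])
        for i in range(n)
    ]


def center_column(steps: int, width: int = 201) -> list[int]:
    """Return the center cell's value for each of the first `steps` rows.

    Assumption: `width` is larger than 2 * steps, so the wraparound
    at the edges never reaches the center cell.
    """
    cells = [0] * width
    cells[width // 2] = 1  # start from a single live cell
    column = []
    for _ in range(steps):
        column.append(cells[width // 2])
        cells = rule30_step(cells)
    return column


if __name__ == "__main__":
    # No closed-form shortcut is known for this sequence; we simply run it.
    print(center_column(20))
```

The update rule is a single line, yet no formula for jumping straight to row n of the center column is known, which is the intuition behind treating such systems as irreducible.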

There is also a dual to this idea in algorithmic information theory (AIT) that is worth exploring. In AIT, it is uncomputable to find the shortest Turing Machine capable of producing a given symbol sequence.…
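As a rough illustration of the AIT point, the length of the shortest program for a sequence cannot be computed in general, but any off-the-shelf compressor gives a computable upper bound on it (up to a constant for the decompressor). The sketch below uses Python's standard `zlib` for that bound; the helper name `compressed_length` and the sample inputs are my own assumptions, not anything from AIT itself.

```python
import os
import zlib


def compressed_length(data: bytes) -> int:
    """Length in bytes of the zlib-compressed form of `data`.

    This is only an upper bound on the true shortest description,
    which is uncomputable in general.
    """
    return len(zlib.compress(data, 9))


# A highly regular sequence: a short program ("repeat 'ab' 5000 times") exists.
regular = b"ab" * 5000

# Bytes from the OS random source: almost certainly no short description.
random_like = os.urandom(10000)

if __name__ == "__main__":
    print("regular:    ", len(regular), "->", compressed_length(regular))
    print("random-like:", len(random_like), "->", compressed_length(random_like))
```

The regular sequence shrinks to a small fraction of its size, while the random bytes barely compress at all. A compressor can show that a short description exists, but it can never certify that no shorter one does.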

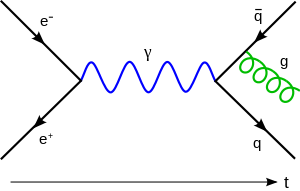

The simulation hypothesis is perhaps a bit more interesting than how to add clusters of neural network nodes to do a simple reference resolution task, but it is also less testable. That is the nature of big questions; if they were easily testable, they would have been resolved by now. Nevertheless, some theory and experimental analysis have been undertaken on the question of whether or not we are living in a simulation, all based on the assumption that the strangeness of quantum and relativistic realities might be a result of limited computing power in the grand simulator machine. For instance, in a virtual reality game, only the walls that you, as a player, can see need to be calculated and rendered. The other walls that are out of sight exist only as a virtual map in the computer’s memory or persisted to longer-term storage. Likewise, the behavior of virtual microscopic phenomena need not be calculated insofar as the macroscopic results can be rendered, like the fire patterns in a virtual torch.
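The wall example is essentially lazy evaluation with caching. Here is a minimal sketch of that idea, with a hypothetical `LazyWorld` class and made-up wall names: the world keeps a cheap map of what exists and only pays the rendering cost when a player actually looks at something.

```python
class LazyWorld:
    """Toy world that renders a wall only when it is first observed."""

    def __init__(self, wall_ids):
        self.wall_ids = set(wall_ids)  # cheap "virtual map" of what exists
        self.render_cache = {}         # walls that have actually been rendered
        self.render_calls = 0          # how many expensive renders happened

    def _expensive_render(self, wall_id: str) -> str:
        # Stand-in for costly geometry and texture work.
        self.render_calls += 1
        return f"texture-for-{wall_id}"

    def look_at(self, wall_id: str) -> str:
        if wall_id not in self.wall_ids:
            raise KeyError(f"no such wall: {wall_id}")
        if wall_id not in self.render_cache:
            self.render_cache[wall_id] = self._expensive_render(wall_id)
        return self.render_cache[wall_id]


if __name__ == "__main__":
    world = LazyWorld(["north", "south", "east", "west"])
    world.look_at("north")
    world.look_at("north")  # second look reuses the cached render
    print(world.render_calls, "render(s) for", len(world.wall_ids), "walls")
```

Three of the four walls are never computed at all, which is the kind of saving the limited-computing-power argument attributes to the hypothetical simulator.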