On The Thinking Atheist, C.J. Werleman promotes the idea, drawn from his new book, that atheists can’t be Republicans. Why? Well, for C.J. it’s because the current Republican platform is not grounded in any kind of factual reality. Supply-side economics, libertarianism, economic stimuli vs. inflation, Iraqi WMDs, Laffer curves, climate change denial: all are grease for the wheels of a fantastical alternative reality where macho small businessmen lift all boats with their steely gaze, the earth is forever resilient to our plunder, and simple truths trump obscurantist science. Watch out for the reality-based community!

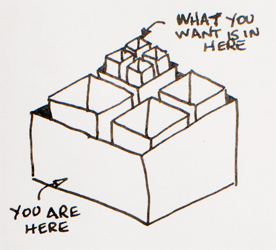

Is politics essentially religion in that it depends on ideology not grounded in reality, spearheaded by ideologues who serve as priests for building policy frameworks?

Likely. But we don’t seem to base our daily decisions on rationality either. 538 Science tells us that it has taken decades to arrive at the conclusion that vitamin supplements are probably of little use to those of us lucky enough to live in the developed world. Before that, we latched onto indirect signals about vitamins C, E, D, B12, and others to decide how to proceed. The thinking typically followed a familiar pattern: someone heard or read that vitamin X is good for us/I’m skeptical/why not?/maybe there are negative side effects/it’s expensive anyway/forget it. Language games operate at every level: promoting, doubting, processing, and reinforcing the microclaims for each option. We embrace signals about differences and nuances, but it often takes months and an accumulation of those signals before we make up our minds. And then we change them again.

Among the well educated, I’ve heard the wildest claims about the effectiveness of chiropractors, pseudoscientific remedies, the role of immunizations in autism (attributed not to preservatives in this instance but to the immune responses themselves), and how karma works in software development practice.…

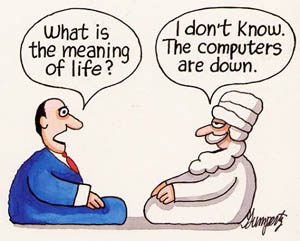

The impossibility of the Chinese Room has implications across the board for understanding what meaning means. Mark Walker’s paper “