I have a longstanding interest in the concept of emergence as a way of explaining a wide range of human ideas and the natural world. We have this incredible algorithm of evolutionary change that creates novel life forms. We have, according to mainstream materialist accounts in the philosophy of mind, a consciousness that may have a unique ontology (what really exists) of subjective experiencers, qualia, and intentionality, but that is also somehow emergent from the meat of the brain (or supervenes on it, or is an epiphenomenon of it, etc.). That emergence may be weak or strong depending on the account: strong means something like the idea that a new thing is added to the ontology, while weak means something like we just don’t know enough yet to reduce the concept to its underlying causal components. If we did, then it would not really be something new in this grammar of ontological necessity.

There is also the problem of computational irreducibility (CI), championed by Wolfram. In CI, there are classes of computations whose outcomes cannot be predicted by any algorithm faster than simply running the computation itself. This seems to open the door to a strong concept of emergence: we have to run the machine to get the outcome; there is no possibility (in theory!) of reducing the outcome to any lesser approximation. I’ve brought this up as a defeater of the Simulation Hypothesis, suggesting that a simulation of the universe as we see it could be no less complex than the universe itself (assuming perfect coherence in the limit).
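
Wolfram’s standard example of CI is the elementary cellular automaton Rule 30. The minimal sketch below computes the center column of Rule 30 grown from a single live cell; the function names are my own, and the finite zero-padded row is an assumption that stays exact because the pattern can only spread one cell per step. As far as anyone knows (it has not been proven), there is no shortcut for the value at step t other than running all t steps.

```python
def step(cells: list[int], rule: int) -> list[int]:
    """Apply one update of an elementary CA (Wolfram rule number), zeros outside the row."""
    padded = [0] + cells + [0]
    # Neighborhood (left, center, right) selects bit 4*left + 2*center + right of the rule.
    return [
        (rule >> (4 * padded[i - 1] + 2 * padded[i] + padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]


def center_column(rule: int, steps: int) -> list[int]:
    """Run the automaton from one live cell and record the center cell at every step."""
    width = 2 * steps + 3  # wide enough that the growing pattern never touches the edges
    cells = [0] * width
    cells[width // 2] = 1
    column = [cells[width // 2]]
    for _ in range(steps):
        cells = step(cells, rule)  # no shortcut: each step needs the full previous row
        column.append(cells[width // 2])
    return column
```

For example, `center_column(30, 4)` returns `[1, 1, 0, 1, 1]`.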

There is also a dual to this idea in algorithmic information theory (AIT) that is worth exploring. In AIT, it is uncomputable to find the shortest Turing Machine that outputs a given symbol sequence; the length of that machine is the sequence’s Kolmogorov complexity. This is closely tied to the Halting Problem, but as an AIT search problem it has interesting implications, since we know that the shorter Turing Machines are also the least likely to misrepresent the extension of the symbol sequence, given some kind of hidden logic internal to the sequence. A way to think about this in less abstract terms is that, given some measurements of an environment, the best predictive model for those measurements is among the shorter logic machines that “explain” or reproduce them. This is, of course, a bit of a restatement of Occam’s razor. In this case, a perfectly random symbol sequence is not reducible to a smaller machine, and neither is a strongly emergent system that parallels Wolfram’s CI.
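
Kolmogorov complexity itself cannot be computed, but any real compressor gives a computable upper bound on it. A minimal sketch using Python’s standard-library `zlib`, comparing a patterned sequence with a pseudorandom one (the sample data and sizes are arbitrary choices):

```python
import random
import zlib


def compressed_length(data: bytes) -> int:
    """Length of the zlib-compressed data: an upper bound on its description length."""
    return len(zlib.compress(data, 9))


rng = random.Random(0)  # seeded, so the "random" bytes are reproducible
patterned = b"01" * 5000  # 10,000 bytes with an obvious short description
noisy = bytes(rng.getrandbits(8) for _ in range(10_000))  # 10,000 pseudorandom bytes

print(compressed_length(patterned), compressed_length(noisy))
```

The patterned bytes shrink to a few dozen bytes while the noisy bytes do not shrink at all. There is a catch that anticipates the argument below: the noisy bytes come from a seeded pseudorandom generator, so a very short program does produce them, and their true Kolmogorov complexity is small even though zlib cannot find that shorter description.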

But is this correct?

Hamed Tabatabaei Ghomi at Cambridge University sees problems with this formulation in his 2022 paper, “Setting the Demons Loose: Computational Irreducibility Does Not Guarantee Unpredictability or Emergence.” He focuses on the use of CI as a basis for claiming any kind of ontological emergence. The problem is that CI only rules out a shortcut that works in general, across all possible algorithms run over unbounded time; it does not rule out shortcuts for particular cases. You can see this easily in my AIT formulation. It is uncomputable to create a *general* algorithm that finds the shortest possible Turing Machine for *any* arbitrary symbol sequence. The italicized *general* and *any* are critical. We can certainly devise clever shortcuts and heuristic algorithms that get us close to the shortest one. These approximate the underlying symbol logic. There are all kinds of these hacks and shortcuts in the real world of algorithms, and they reduce the time complexity of the so-called “brute force” solution to something more quickly computable.
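
Restricting the class of machines is what makes the search tractable. As a deliberately weak, hypothetical description language, suppose a sequence may only be described as a repeating unit. Finding the shortest such description is then an exhaustive search that always halts, precisely because this language is nowhere near Turing-complete:

```python
def shortest_period(seq: str) -> str:
    """Return the shortest unit u such that seq is a prefix of u repeated indefinitely."""
    for k in range(1, len(seq) + 1):
        unit = seq[:k]
        # Accept the first (shortest) unit that reproduces every symbol of the sequence.
        if all(seq[i] == unit[i % k] for i in range(len(seq))):
            return unit
    return seq  # only reached for the empty sequence
```

For example, `shortest_period("abcabcab")` returns `"abc"`, while a sequence with no repetition comes back unchanged, the analogue of an incompressible sequence within this tiny language.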

Likewise, for CI systems built on cellular automata, Ghomi points out that “coarse-graining” is a method for transforming the automaton rules into sketches that are somewhat predictable. This is sort of like using larger volumes in a weather model to allow for quicker, less reliable prediction: some of the physical properties at finer granularities can be neglected while still achieving a useful degree of accuracy.

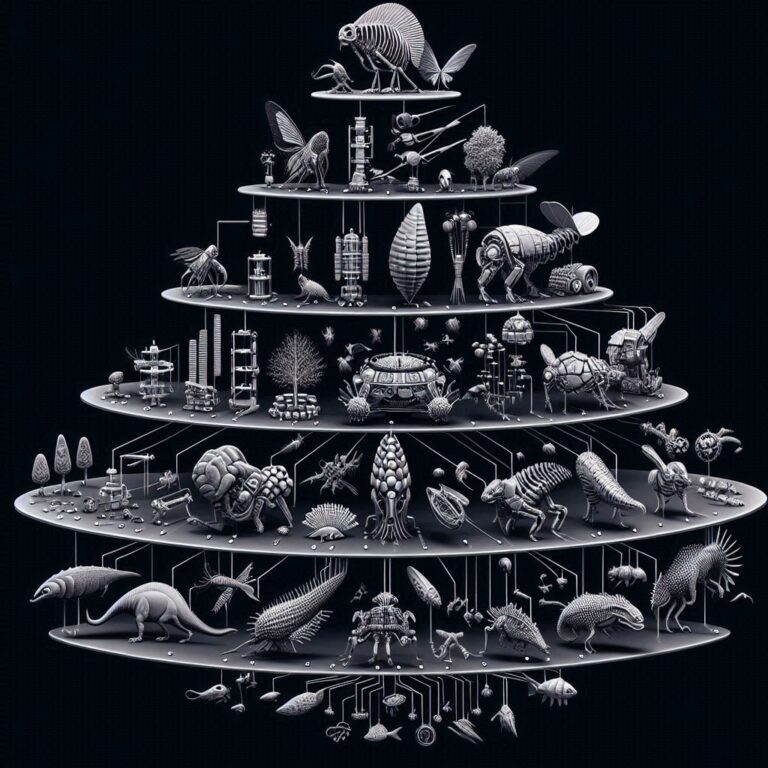
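
The coarse-graining idea can be made concrete for elementary cellular automata. In the minimal sketch below (my own construction; block size 2, two fine steps per coarse step, and elementary coarse rules are all assumptions), a projection P maps each 2-cell block to one bit, and the search asks whether some elementary rule g satisfies P(f²(x)) = g(P(x)): running the fine rule f twice and then projecting must agree with projecting first and running g once. Each coarse cell after two fine steps depends only on the three blocks around it, so checking all 64 six-cell windows is exhaustive.

```python
from itertools import product


def rule_output(rule: int, left: int, center: int, right: int) -> int:
    """Wolfram numbering: neighborhood (l, c, r) selects bit 4l + 2c + r of the rule."""
    return (rule >> (4 * left + 2 * center + right)) & 1


def evolve_window(window: tuple[int, ...], rule: int, steps: int) -> tuple[int, ...]:
    """Evolve a finite window; each step drops one cell per side, so no boundary is assumed."""
    cells = tuple(window)
    for _ in range(steps):
        cells = tuple(rule_output(rule, *cells[i - 1 : i + 2]) for i in range(1, len(cells) - 1))
    return cells


def coarse_grainings(rule: int) -> list[tuple[int, int]]:
    """Return (projection, coarse_rule) pairs that exactly coarse-grain `rule`."""
    found = []
    for projection in range(1, 15):  # 4-bit truth tables over blocks; skip constants 0 and 15

        def project(a: int, b: int) -> int:
            return (projection >> (2 * a + b)) & 1

        table: dict[tuple[int, int, int], int] = {}
        consistent = True
        for window in product((0, 1), repeat=6):  # three adjacent 2-cell blocks
            coarse_nbhd = (project(*window[0:2]), project(*window[2:4]), project(*window[4:6]))
            value = project(*evolve_window(window, rule, steps=2))  # the middle block, 2 steps on
            if table.setdefault(coarse_nbhd, value) != value:
                consistent = False  # same coarse neighborhood, different outcomes: no valid g
                break
        if consistent:
            # A non-constant projection makes all 8 coarse neighborhoods occur, so g is complete.
            coarse_rule = sum(
                table[(l, c, r)] << (4 * l + 2 * c + r) for l, c, r in product((0, 1), repeat=3)
            )
            found.append((projection, coarse_rule))
    return found
```

For Rule 128 (a cell stays on only if it and both neighbors are on), the AND projection, encoded as 8, coarse-grains back to Rule 128 itself, so `(8, 128)` appears in `coarse_grainings(128)`. Calling `coarse_grainings(30)` checks whether any exact coarse-graining of this restricted kind exists for Rule 30.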

But getting a better understanding of this apparent gradation from weak to strong, and from computational to ontological emergence, requires some way of capturing the underlying structure of the algorithm choices. How reducible are these different classes of algorithms and explanatory models? Returning to the AIT dual, we need a search algorithm that is general enough to look through collections of machines for shorter variants. In fact, since we know that recursivity is the basic property that distinguishes grammars and Turing Machines from simpler state machines, and that recursivity can often be represented by modular algorithmic constructs, we should look for a search algorithm that preserves and reuses contingent partial solutions through some kind of memoization, and that tries novel combinations by building Frankensteins out of all the available parts.
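
A small-scale version of that memoized, compositional search can be sketched with dynamic programming. The description language is hypothetical: a single character costs 1, concatenation costs the sum of its parts, and repeating a part k times (written `(part){k}`) costs one more than the part. `functools.lru_cache` stores the best description of every substring, so partial solutions are reused as building blocks for larger ones. This is exhaustive search over a toy grammar rather than an evolutionary one, and it only works because the language is too weak to be Turing-complete.

```python
from functools import lru_cache


def shortest_description(target: str) -> tuple[int, str]:
    """Return (cost, expression) of the cheapest description of target in the toy language."""

    @lru_cache(maxsize=None)  # memoize: each substring's best description is computed once
    def best(s: str) -> tuple[int, str]:
        if not s:
            return 0, ""
        if len(s) == 1:
            return 1, s  # a literal character
        candidates = []
        # Option 1: concatenate the best descriptions of a prefix and a suffix.
        for i in range(1, len(s)):
            left_cost, left_expr = best(s[:i])
            right_cost, right_expr = best(s[i:])
            candidates.append((left_cost + right_cost, left_expr + right_expr))
        # Option 2: s is k copies of a shorter unit; reuse that unit's best description.
        for size in range(1, len(s)):
            k, remainder = divmod(len(s), size)
            if remainder == 0 and s[:size] * k == s:
                unit_cost, unit_expr = best(s[:size])
                candidates.append((unit_cost + 1, f"({unit_expr}){{{k}}}"))
        return min(candidates)

    return best(target)
```

For example, `shortest_description("abababab")` returns `(3, "(ab){4}")`: the cached description of `"ab"` is reused as a part rather than rediscovered.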

And there it is: the algorithm of evolution acting on the state space of possible solutions and compiling together incomplete but operational machines. It is certainly weak emergence in the taxonomy philosophers apply to these areas, but it is a general search approach for weakly emergent to near-strongly emergent systems. That may be enough.
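
That search can be sketched as a minimal genetic algorithm. The fixed target string, population size, mutation rate, and truncation selection are all illustrative assumptions, and a fixed target makes this far simpler than open-ended biological evolution. Crossover splices the front of one parent onto the back of another (the Frankenstein step), mutation occasionally tries something new, and keeping the top quarter of each generation unchanged preserves the best partial solutions found so far.

```python
import random


def evolve(target: str, alphabet: str, pop_size: int = 60,
           generations: int = 300, seed: int = 0) -> tuple[str, list[int]]:
    """Evolve strings toward target; return the best candidate and best fitness per generation."""
    rng = random.Random(seed)

    def fitness(candidate: str) -> int:
        return sum(a == b for a, b in zip(candidate, target))  # count of matching positions

    def mutate(candidate: str) -> str:
        rate = 1 / len(target)  # on average, about one symbol changes per child
        return "".join(rng.choice(alphabet) if rng.random() < rate else ch for ch in candidate)

    def crossover(a: str, b: str) -> str:
        cut = rng.randrange(1, len(target))  # splice a's prefix onto b's suffix
        return a[:cut] + b[cut:]

    population = ["".join(rng.choice(alphabet) for _ in target) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        if population[0] == target:
            break
        parents = population[: pop_size // 4]  # elitism: the best quarter survives unchanged
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents)))
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    return max(population, key=fitness), history
```

Calling `evolve("HELLO WORLD", "ABCDEFGHIJKLMNOPQRSTUVWXYZ ")` returns the best string found and the fitness history; because the parents survive each round, the best fitness never decreases from one generation to the next.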
