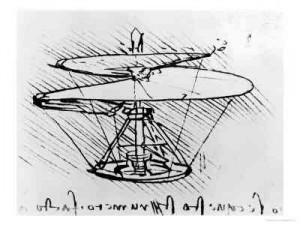

At 38,000 feet somewhere above Missouri, returning from a one-day trip to Washington, D.C., it is easy to take the point Nick Bostrom makes in his paper, “How Hard is Artificial Intelligence? Evolutionary Arguments and Selection Effects”: bird flight is not the end-all of what is possible for airborne objects and mechanical contrivances like airplanes. His efforts to bound the evolution of intelligence and classify it as either Hard or Not-Hard run up against significant barriers, however. As a practitioner of the art, I find that the comparison between a purely physical phenomenon like flying and something as complex as human intelligence falls flat.

But Bostrom takes flying as no more than a starting point for arguing that intelligence is an engineer-able possibility. And that possibility might be bounded by a number of current and foreseeable limitations, not least of which is that a computer simulation of evolution requires a certain amount of computing power and representational detail to be sufficient. His conclusion is that we may need as much as another 100 years of improvements in computing technology just to reach the point where we might succeed at a massive-scale evolutionary simulation (I’ll leave it to the reader to investigate his additional arguments concerning convergent evolution and observer selection effects).

Bostrom dismisses as pessimistic the assumption that a sufficient simulation would, in fact, require a highly detailed emulation of some significant portion of the real environment and the history of organism-environment interactions:

A skeptic might insist that an abstract environment would be inadequate for the evolution of general intelligence, believing instead that the virtual environment would need to closely resemble the actual biological environment in which our ancestors evolved … However, such extreme pessimism seems unlikely to be well founded; it seems unlikely that the best environment for evolving intelligence is one that mimics nature as closely as possible.

The impossibility of the Chinese Room has implications across the board for understanding what meaning means. Mark Walker’s paper “
