I’ll jump directly into my main argument without stating more than the basic premise: if determinism holds, none of our actions could have been otherwise and there is no “libertarian” free will.

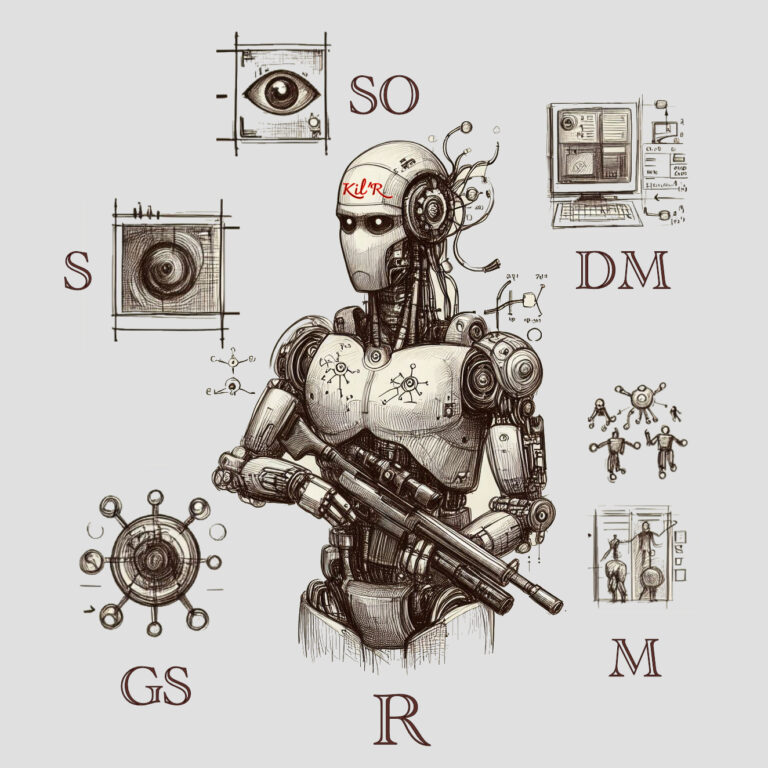

Let’s construct a robot (R) that has a decision-making apparatus (DM), some sensors (S) for collecting impressions about our world, and a memory (M) of all those impressions and past decisions of DM. DM is pretty much an IF-THEN arrangement but has a unique feature. It has subroutines that generate new IF-THENs by taking existing rules and randomly recombining them with variation. This might be done by simply snipping rules apart at their logical operators (the rule “blue AND wings AND small => bluejay at 75%” can be pulled apart into “blue AND wings” and “wings AND small”, and those two pieces combined with pieces of other such rules). This generative subroutine (GS) then scores the novel IF-THENs by comparing them to the recorded history contained in M as well as current sensory impressions, and keeps the new rule that scores best, or the top few if they score closely. The scoring methodology might include a combination of coverage and fidelity to the current impressions and/or the recalled actions and impressions.
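Here is a minimal sketch of what GS might look like. Everything in it is my own assumption for illustration: rules are (conditions, label) pairs, M is a list of (features, label) episodes, and the score is the harmonic mean of coverage and fidelity, which is just one possible "combination" of the two. It keeps only the single best rule rather than the top few.

```python
import random
from itertools import combinations

def fragments(conditions):
    """Snip a conjunction into all of its proper, non-empty sub-conjunctions."""
    terms = sorted(conditions)
    return [frozenset(c) for n in range(1, len(terms)) for c in combinations(terms, n)]

def score(rule, memory):
    """Harmonic mean of coverage and fidelity (an assumed scoring choice)."""
    conditions, label = rule
    matched = [lab for feats, lab in memory if conditions <= feats]
    if not matched:
        return 0.0
    coverage = len(matched) / len(memory)                           # how much of M the rule fires on
    fidelity = sum(lab == label for lab in matched) / len(matched)  # how often it is right when it fires
    if fidelity == 0:
        return 0.0
    return 2 * coverage * fidelity / (coverage + fidelity)

def generate(rules, memory, rng, attempts=50):
    """Randomly recombine fragments of two rules; keep the best-scoring rule seen."""
    best = max(rules, key=lambda r: score(r, memory))
    for _ in range(attempts):
        (c1, label), (c2, _) = rng.sample(rules, 2)
        candidate = (rng.choice(fragments(c1)) | rng.choice(fragments(c2)), label)
        if score(candidate, memory) > score(best, memory):
            best = candidate
    return best

# Hypothetical rules in DM and episodes in M.
rules = [
    (frozenset({"blue", "wings", "small"}), "bluejay"),
    (frozenset({"wings", "loud", "crest"}), "bluejay"),
]
memory = [
    ({"blue", "wings", "small", "crest"}, "bluejay"),
    ({"blue", "wings", "crest", "loud"}, "bluejay"),
    ({"blue", "wings", "large"}, "heron"),
    ({"red", "wings", "small", "crest"}, "cardinal"),
]

best = generate(rules, memory, random.Random(0))
print(sorted(best[0]), best[1], round(score(best, memory), 3))
```

The sketch assumes every rule has at least two conditions, so that there is always something to snip.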

Now this is all quite deterministic. I mentioned randomness but we can produce pseudo-random number generators that are good enough or even rely on a small electronic circuit that amplifies thermodynamic noise to get something “truly” random. But really we could just substitute an algorithm that checks every possible reorganization and scores them all and shelve the randomness component, alleviating any concerns that we are smuggling in randomness for our later construct of free agency.
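To make the "shelve the randomness" point concrete, here is a sketch of the exhaustive variant. The names `all_recombinations`, `exhaustive_best`, and the stand-in `toy_score` are hypothetical; the real scoring against M would be plugged in where `toy_score` is. Ties are broken by sorted order, so no random number generator appears anywhere.

```python
from itertools import combinations, product

def fragments(conditions):
    """All proper, non-empty sub-conjunctions of a rule's conditions."""
    terms = sorted(conditions)
    return [frozenset(c) for n in range(1, len(terms)) for c in combinations(terms, n)]

def all_recombinations(rules):
    """Every union of one fragment from each rule, over every ordered pair of rules."""
    out = set()
    for (c1, label), (c2, _) in product(rules, repeat=2):
        for f1, f2 in product(fragments(c1), fragments(c2)):
            out.add((f1 | f2, label))
    return out

def exhaustive_best(rules, score_fn):
    """Score every candidate; break ties on the sorted conditions and the label."""
    ranked = sorted(
        all_recombinations(rules),
        key=lambda r: (-score_fn(r), sorted(r[0]), r[1]),
    )
    return ranked[0]

rules = [
    (frozenset({"blue", "wings", "small"}), "bluejay"),
    (frozenset({"wings", "loud", "crest"}), "bluejay"),
]

# Stand-in score: reward mentioning "blue" and "crest", penalize rule length.
def toy_score(rule):
    conditions, _ = rule
    return len(conditions & {"blue", "crest"}) - 0.1 * len(conditions)

print(exhaustive_best(rules, toy_score))
```

The cost is that the candidate set grows quickly with the number and length of rules, which is presumably why one would reach for random sampling in the first place.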

Now let’s add a rule to DM that when R perceives it has been treated unfairly it might murder the human being who treated it that way. The rule for murder is something like when a collection of perceptions exceeds a threshold value in terms of scores, R proceeds to murder the person. But the sensors are pretty unreliable and there are already a bunch of rules for dealing with that and attaching various probabilities to the incoming perception stream. Being wronged might arise from sensing a human grimace, for instance, but we have a great many rules about human grimaces and what their causal reasons might be in DM. Computing out all those rules results in a range of uncertainty about whether the grimace constitutes a wrong. And we keep building new candidate rules around grimaces that move the computations around on the threshold scale for murder.

Now R sees a second nasty look from the human being and suddenly R is over the threshold according to the DM. The rule fires to begin the murder action. While R is walking over on gangly robot legs, the rules reorganize slightly and the score suddenly gets pushed back down below the threshold. The reorganization in this case was a perception that the human being is reading a book and the nasty look may be in reaction to what the person is reading. There is a chance that the grimace and look are unrelated to shading R, and a new rule is generated that has better coverage of the intersection of all the facts. The murder score drops precipitously. But it might also just be a routine reorganization of the rules without an environmental signal; because different starting points can result in different outcomes, it is useful to search for better constructs with more fidelity/coverage from time to time. Or the reorganization can be initiated by embedded rules triggering, for instance, when uncertainty is too high, or when some other criterion is reached, like the moral weight of such an impactful decision on another entity. This is the equivalent of just rethinking a decision.
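A toy version of the threshold rule and the rethink, under loudly stated assumptions: each perception carries a probability that it constitutes a wrong, the score is a plain sum, and the threshold of 1.0 and the discount of 0.3 are numbers I made up. The `reinterpret` function stands in for the newly generated rule about the book.

```python
from dataclasses import dataclass, replace

THRESHOLD = 1.0       # hypothetical action threshold
BOOK_DISCOUNT = 0.3   # hypothetical: how much "reading a book" explains away a look

@dataclass(frozen=True)
class Perception:
    kind: str
    p_wrong: float  # DM's current estimate that this perception means R was wronged

def grievance_score(perceptions):
    """Sum the wrong-probabilities of everything currently perceived."""
    return sum(p.p_wrong for p in perceptions)

def decide(perceptions):
    """Fire the action rule only when the score exceeds the threshold."""
    return "proceed" if grievance_score(perceptions) > THRESHOLD else "stand_down"

def reinterpret(perceptions, context):
    """New rule: facial expressions made while reading are probably about the book."""
    if "reading_book" not in context:
        return list(perceptions)
    return [
        replace(p, p_wrong=p.p_wrong * BOOK_DISCOUNT)
        if p.kind in {"grimace", "nasty_look"} else p
        for p in perceptions
    ]

seen = [Perception("grimace", 0.55)]
print(decide(seen))                                 # one grimace is not enough
seen.append(Perception("nasty_look", 0.6))
print(decide(seen))                                 # second look tips R over
print(decide(reinterpret(seen, {"reading_book"})))  # rethink on the way over
```

The same `reinterpret` step could just as well be triggered by a timer or by a rule about moral weight rather than by a new perception; only the trigger differs.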

We can, without harm, put a subjective observer (SO) subsystem into R. All the sensor impressions, the mass of rules in DM, M, and the GS subsystem for novel rule generation pass through SO and it maintains a short-term buffer for holding the current state of affairs. To make it “subjective” we add some rules to DM that attach weights and various qualia symbols to the items passing through SO. So a grimace observation is both an impression and has attached descriptive symbols (IS-NASTY, IS-MEAN, IM-SEEING-RED) that are relevant primarily to SO. Since the attached qualia symbols feed through the DM, they in turn influence the decision process. The observer influences the outcome.
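As a rough sketch of SO, assuming a fixed-size buffer and a lookup table of qualia symbols with made-up weights: items pass through, get tagged, and the tags change the number that DM ends up comparing against its threshold. The class name, table, and weights are all hypothetical.

```python
from collections import deque

# Hypothetical tagging rules: impression kind -> qualia symbols and their weights.
QUALIA_RULES = {
    "grimace": {"IS-NASTY": 0.2, "IS-MEAN": 0.1},
    "nasty_look": {"IS-NASTY": 0.2, "IM-SEEING-RED": 0.4},
}

class SubjectiveObserver:
    def __init__(self, capacity=5):
        # Short-term buffer: the oldest items fall out once capacity is reached.
        self.buffer = deque(maxlen=capacity)

    def observe(self, kind, base_score):
        """Attach qualia symbols to an impression and hold it in the buffer."""
        item = {"kind": kind, "base": base_score, "tags": QUALIA_RULES.get(kind, {})}
        self.buffer.append(item)
        return item

    def raw_score(self):
        """What DM would compute with no observer attached."""
        return sum(item["base"] for item in self.buffer)

    def felt_score(self):
        """What DM computes once the qualia weights feed back in."""
        return sum(item["base"] + sum(item["tags"].values()) for item in self.buffer)

so = SubjectiveObserver()
so.observe("grimace", 0.5)
so.observe("nasty_look", 0.4)
print(so.raw_score(), so.felt_score())
```

With a threshold of 1.0, the untagged impressions stay under it while the tagged ones go over, which is all "the observer influences the outcome" needs to mean here.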

And there we have it: R can do otherwise given a certain situation of moral significance. The reorganization algorithm has some small randomness to it but we are not ascribing free agency to the randomness per se. Instead it is the conjoining, scoring, and rescoring of rules that allows for a choice to be made. SO watches it all go down, all the schemes and hopes and passing creative thoughts. The new rules settle and a choice is complete.

Everything, R and the environment alike, is moving along very deterministically, but R’s perception and control of the environment is mostly computationally irreducible in terms of next states. Many things might happen (not anything, but many things), and so the thresholds within R keep being adjusted. The uncertainty bands that surround the thresholds for action, along with the complex of subroutines for handling escalations, get modified into increasingly sophisticated algorithms that weigh uncertain impressions and counterproposals to cope with that uncertainty. SO feels it and those feelings help reorganize the models.

I think we get to Wolfram’s suggestion that computational irreducibility leads to people’s belief in free will, but we also get to a realization that decisions are, in fact, truly up to us if we are something like this kind of robot. An emergent algorithm, in engaging with uncertainty, can reorganize to produce a range of otherwises. If we run the clock forward again for a given situation, the uncertainty present in the environment is sufficient that the rule changes and the decisions can in fact be different, even leaving out the random number generator. Local optima also invariably arise in these reorganization schemes, and reorganizing more than once with different walks through the state space of rule combinations can lead to better rule subsystems. That in turn leads to some kind of internal narrative in which R realizes it hasn’t thought of things that way, or hadn’t gone down that path, or needs to look at the problem with fresh eyes (S).

And now, having solved free will, I need to decide what I want for dinner.

Added rethinking note to offset any impression that an environmental signal is necessary for DM reorganization.

Edit: justifying => stating in first sentence

One more add-on about moral weight and self-triggering to emphasize the notion of self-agency.