The best paper I’ve read so far this year has to be Pseudo-Mathematics and Financial Charlatanism: The Effects of Backtest Overfitting on Out-of-sample Performance by David Bailey, Jonathan Borwein, Marcos López de Prado, and Qiji Jim Zhu. The title should ring alarm bells with anyone who has ever puzzled over the disclaimer from mutual funds and investment strategists that “past performance is not a guarantee of future performance.” True, but past performance is all we have to judge a fund or firm on. The alternatives are to pick based on vague investment “philosophies,” as the heroizing profiles in Kiplinger’s seem to encourage, or to trust that arbitrage has squeezed the markets into perfect equilibrium and just buy index funds.

The paper’s core tenets extend well beyond financial charlatanism, however. The authors point out that the same problem arises in drug discovery, where the apparent main effects of a novel compound may be due to pure randomness in the sample population, masked by the sample selection procedure. The history of mental illness research has similar failures, with the head of NIMH remarking that clinical trials and the DSM, as tools for treating psychiatric symptoms, are too often “shooting in the dark.”

The core suggestion of the paper is remarkably simple: use held-out data to validate models. Simple, but apparently rarely done in quantitative financial analysis. The researchers show how a plain random walk can look like a seasonal price pattern, and how investment advisors, by sending different clients opposite binary signals about market performance (market will rise/market will fall), can create a subpopulation that thinks they are geniuses while the other clients walk away with losses. Tricks like these rise to the level of charlatanism, whereas overfitting is merely pseudo-mathematics: too little care taken in managing the data. And the fix is simple: do what we do in machine learning. Divide the data into 10 buckets, train on 9 of them, and verify on the last one. Then rotate the held-out bucket, or re-divide the data and train again. Anomalies in the data start popping out very quickly, and ensemble-based methods can help to cope with breakdowns of independence assumptions and stability.
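To make the bucket rotation concrete, here is a minimal sketch of my own, not code from the paper. It assumes ten years of pure-noise daily returns, treats each year as one bucket, and fits a toy "seasonal" rule (long or short for each week of the year) on nine years before checking it on the tenth. The function names, the week-of-year rule, and the 1% daily volatility are all assumptions made for illustration.

```python
import random
import statistics


def seasonal_rule(years, n_weeks):
    """Fit a 'seasonal pattern': go long (+1) in a week of the year whose
    total training return was positive, short (-1) otherwise."""
    rule = []
    for w in range(n_weeks):
        total = sum(sum(year[w]) for year in years)
        rule.append(1 if total > 0 else -1)
    return rule


def mean_daily_pnl(rule, years):
    """Average daily profit from trading `rule` over the given years."""
    pnl = [rule[w] * r
           for year in years
           for w, week in enumerate(year)
           for r in week]
    return statistics.mean(pnl)


def cross_validate(n_years=10, n_weeks=52, days_per_week=5, seed=0):
    """Rotate the held-out year and compare in-sample with held-out profit."""
    rng = random.Random(seed)
    # Pure noise: zero true mean, so there is no real seasonality to find.
    data = [[[rng.gauss(0.0, 0.01) for _ in range(days_per_week)]
             for _ in range(n_weeks)]
            for _ in range(n_years)]
    in_sample, held_out = [], []
    for i in range(n_years):
        train = data[:i] + data[i + 1:]  # the other nine buckets
        test = [data[i]]                 # the bucket held out this round
        rule = seasonal_rule(train, n_weeks)
        in_sample.append(mean_daily_pnl(rule, train))
        held_out.append(mean_daily_pnl(rule, test))
    return statistics.mean(in_sample), statistics.mean(held_out)


if __name__ == "__main__":
    ins, out = cross_validate()
    print(f"mean daily profit, in-sample: {ins:.5f}")
    print(f"mean daily profit, held-out:  {out:.5f}")
```

By construction the in-sample profit is always positive, because the rule is fitted to the very noise it is scored on. The held-out figure has no such guarantee and should typically sit much closer to zero, which is the gap a backtest without held-out data never shows you.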

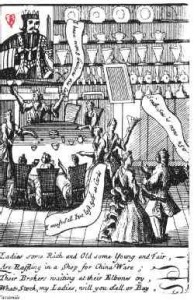

Perhaps the best quote in the paper is from Sir Isaac Newton, who lamented that he could not calculate “the madness of people” after losing a minor fortune in the South Sea Bubble of 1720. If we are to start computing that madness, it is important to do it right.