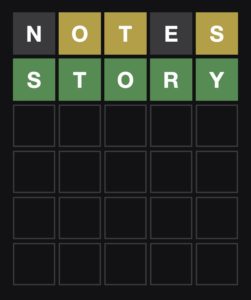

I occasionally do Wordles at the New York Times. If you are not familiar, the game is very simple. You have six chances to guess a five-letter word. When you make a guess, letters that are in the correct position turn green. Letters that are in the word but in the wrong position turn yellow. The best way to start is with a word built from high-frequency English letters, like “notes,” and then proceed from there. At some point in the guessing process, you are confronted with anchoring known letters and trying to remember words that might fit the sequence. A handy virtual keyboard below the word matrix shows the letters in black, yellow, green, and gray: those you have tried, those that are required, those that are fit to position, and those that remain untested, respectively. After a bit, you start to apply little algorithms and exclusionary rules to the process: What if I anchor an S at the beginning? There are no five-letter words that end in “yi” in English, etc. There is a feeling of working through these mental strategies and even a feeling of green and yellow as signposts along the way.

I decided this morning to write the simplest one-line Wordle helper I could and solved the puzzle in two guesses.

Sorry for the spoiler if you haven’t gotten to it yet! Here’s what I needed to do the job: a five-letter word list for English and a word frequency list for English. I could have derived the first from the second but found the first first, here. The second required that I log into Kaggle to get a good searchable CSV list. Next, I entered “NOTES” as a fairly good seed of high-frequency English letters and constructed a bash loop. It uses grep to drop words from the five-letter list that have any of the guessed letters in the guessed positions, keep only words that contain O, T, and S, drop words that contain E or N, and then look up each surviving candidate in the frequency list:

% for WORD in $(grep "^[^n][^o][^t][^e][^s]$" sgb-words.txt | grep o | grep t | grep s | grep -v "[en]"); do grep "^$WORD," unigram_freq.csv; done

And we get:

story,138433809 short,106755242 stood,11116275 storm,22242164 stock,176295589 shoot,12695618 shout,3965861 ghost,14490042 sport,62683851 frost,5940685 stool,3031945 scout,6176157 stout,1939514 spout,905624 stoop,360440 sloth,519428 stork,728360 stomp,1168038 scoot,269666 gusto,512932 stoic,272080 ascot,888745 strop,63137 stoat,49468 stoma,122408

Note that if I had properly sorted the list by frequency by piping the output through sort -t , -k 2 -nr, I would have found “stock” to be the best choice. I didn’t notice that “story” was inferior to “stock” in corpus frequency, so I went with “story” and got the prize anyway.
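Putting the filter, the lookup, and the sort together, a minimal sketch might look like the following. The function name wordle_candidates is hypothetical, the feedback for “notes” is hard-coded, and the two file arguments are assumed to have the same formats as sgb-words.txt (one word per line) and unigram_freq.csv (word,count).

```shell
# Sketch only: wordle_candidates is a hypothetical helper, hard-coded for the
# guess "notes" with O, T, S yellow and N, E gray.
#   $1: word list, one lowercase five-letter word per line (assumed format)
#   $2: frequency CSV with lines of the form word,count (assumed format)
wordle_candidates() {
  local words="$1" freqs="$2"
  grep '^[^n][^o][^t][^e][^s]$' "$words" |  # no guessed letter in its guessed position
    grep o | grep t | grep s |              # yellow letters must appear somewhere
    grep -v '[en]' |                        # gray letters must not appear at all
    while read -r word; do
      grep "^$word," "$freqs"               # look up word,count; prints nothing if absent
    done |
    sort -t, -k2,2nr                        # highest corpus count first
}
```

Called as wordle_candidates sgb-words.txt unigram_freq.csv, this should print the same candidates as above, with “stock” at the top.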

Fun as that was, remembering all my regular expression rules and bash shell syntax, what I liked most was the symmetry of using regex to build simulations of mental processes. Note that this method has a certain perfection to it, in that a person’s recollections of good candidate words carry only a mild experiential feeling for corpus frequencies. Since we assume that the words are chosen randomly and not by some devious scheme (“larva” the other day may test that theory), choosing by letter and word frequency should be close to the optimal strategy for solving Wordle (arguably, if the words are chosen randomly from a five-letter word list, then letter frequency within that list, not corpus frequency, is what matters).
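The parenthetical point about letter frequency within the list can be checked directly. The sketch below counts how often each letter occurs in a word list. The name letter_counts is hypothetical, and the input is assumed to be one lowercase word per line, as in sgb-words.txt.

```shell
# Sketch only: letter_counts is a hypothetical helper.
#   $1: word list, one lowercase word per line (assumed format)
letter_counts() {
  fold -w1 "$1" |              # break each word into one letter per line
    grep . |                   # drop any empty lines
    sort | uniq -c |           # count occurrences of each letter
    awk '{ print $2, $1 }' |   # reorder each line to "letter count"
    sort -k2,2nr -k1,1         # most frequent first, ties in alphabetical order
}
```

Running letter_counts sgb-words.txt | head would list the letters to favor in an opening guess, under the assumption that answers are drawn uniformly from that list.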

Regular expressions describe regular languages, which sit at the bottom of the Chomsky hierarchy of formal languages. They are accepted by simple machines called finite-state automata. Context-free languages are next up and are accepted by pushdown automata, followed by context-sensitive languages (linear bounded automata) and recursively enumerable languages (Turing machines). Since Turing machines can compute all recursive functions, they serve as a placeholder for what we consider logical machines capable of any effective computation. What they can’t do is decide undecidable problems, such as the halting problem. Exponential growth in a computation only makes it slow, not impossible. For a language that is recursively enumerable but not recursive, a Turing machine accepts every string in the language but may never halt on strings outside it.
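To make the finite-state idea concrete, here is a small sketch that runs the first grep pattern, ^[^n][^o][^t][^e][^s]$, as an explicit automaton. Each letter consumed advances one state, a forbidden letter leads to rejection, and only the state reached after exactly five letters accepts. The name dfa_accepts is hypothetical, and this is an illustration of the idea rather than how grep is actually implemented.

```shell
# Sketch only: dfa_accepts is a hypothetical helper that hand-simulates a
# finite-state automaton for the pattern ^[^n][^o][^t][^e][^s]$.
dfa_accepts() {
  local word="$1" banned="notes" ch forbidden w_rest b_rest
  while [ -n "$word" ]; do
    [ -n "$banned" ] || return 1           # a sixth letter: no transition left, reject
    w_rest="${word#?}"                     # input after the current letter
    b_rest="${banned#?}"                   # forbidden letters for the later states
    ch="${word%"$w_rest"}"                 # current input letter
    forbidden="${banned%"$b_rest"}"        # letter forbidden in the current state
    [ "$ch" != "$forbidden" ] || return 1  # forbidden letter: dead state, reject
    word="$w_rest"; banned="$b_rest"       # advance to the next state
  done
  [ -z "$banned" ]                         # accept only after exactly five letters
}
```

For example, dfa_accepts story succeeds, while dfa_accepts notes fails on its first letter. The machine needs no memory beyond which state it is in, which is what makes the language regular.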

But what of my experiences of green and yellow and the feeling of trying to find candidates when using my wetware to solve Wordles? The philosopher David Chalmers formulated the so-called “Hard Problem of Consciousness” to name the difficulty we have in explaining our conscious experiences. At its core, the problem boils down to whether we can imagine ways that machinery can have experiences like green or yellow. “Imagine” is perhaps too loose a word to apply here, for philosophers do have arguments about what can and cannot be imagined. And, indeed, simply brushing the problem aside also requires structured imagination: that the problem can and will be resolved by the science of the mind, rather than by philosophical argumentation and speculation. Still, there are good reasons to believe that the problem is tractable by science. For one, it is a question about biological systems (brains), unlike, say, a question like “What is the meaning of life?” And we have a good track record of understanding the mechanisms of biology. The problem may also simply be an error, in that we are using folk terminology to describe subjective experience. P. M. S. Hacker thinks the Hard Problem is nonsense because we are using language badly. So, “What is it like to be a bat?” or “What is it like to be me?” are just mystifying expressions (“deepities,” as some call them) since there is nothing else I can in fact be. If you ask me the contents of my qualitative experience, there is no doubt about that content. It can’t be otherwise. I can have doubts about its relationship to the world or past experiences, but the content itself is unquestionably my experience.

And that leads us to a broader question about whether philosophy brings value to these edge questions. In the previous post, for instance, and in the video, the issue of whether or not there can be an actual infinity arises. In other words, when we think about the ordering of things, and we also think about the mathematics of infinity using Cantor’s formulation, are there logical inconsistencies that arise from speculating (again, imagining) that the universe might be infinitely old? This is the lead-in to the question of the validity of the Kalam cosmological argument, and it is the worst part of the video in that there is no empirical reality or evidence for infinities associated with the universe or things within it. It brings us no value. Causality, by contrast, does have empirical ways of anchoring its semantics, and they are not identical to neatly dividing the world into contingent and non-contingent things. That is imaginative definition without merit.

So the Hard Problem of Philosophy comes down to where it has relevance, and there are strong reasons to think that virtue, society, and ethics are where it may have gage (to borrow an archaic term from Hacker). The encroachments on science are merely discursive until we have data.
