Deep Learning now dominates discussions of intelligent systems in Silicon Valley. Jeff Dean’s discussion of its role across Alphabet’s product lines and initiatives shows how pervasive the methodology has become. The push beyond what Artificial Neural Networks could previously do has been driven by certain algorithmic enhancements and by the ability to run weight-training algorithms at much higher speeds and over much larger data sets. Google even developed specialized hardware, the Tensor Processing Unit (TPU), to assist.

Broadly, though, we see mostly pattern recognition problems like image classification and automatic speech recognition being impacted by these advances. Natural language parsing has also recently had some improvements from Fernando Pereira’s team. The incremental improvements using these methods should not be minimized but, at the same time, the methods don’t emulate key aspects of what we observe in human cognition. For instance, the networks train incrementally and lack the kinds of rapid transitions that we observe in human learning and thinking.
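To make "train incrementally" concrete, here is a minimal sketch of a single sigmoid unit trained by full-batch gradient descent on the logical OR function. It is an illustration only, not anything taken from the systems mentioned above: the names `train_logistic_unit` and `OR_DATA`, the learning rate, and the epoch count are all assumptions chosen for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_unit(samples, epochs=200, lr=0.5):
    """Full-batch gradient descent on one sigmoid unit with two inputs.

    Returns the weights, the bias, and the mean cross-entropy loss
    measured at the start of each epoch (before that epoch's update).
    """
    w = [0.0, 0.0]
    b = 0.0
    n = len(samples)
    losses = []
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        loss = 0.0
        for (x1, x2), y in samples:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            loss += -(y * math.log(p) + (1 - y) * math.log(1 - p))
            err = p - y  # error signal: prediction minus target
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        losses.append(loss / n)
        # One small step against the averaged gradient.
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b, losses


# Hypothetical toy data set: the logical OR of two binary features.
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias, losses = train_logistic_unit(OR_DATA)
for epoch in (0, 10, 50, 199):
    print(f"epoch {epoch:3d}  loss {losses[epoch]:.4f}")
```

Each pass nudges the weights a little in response to the error signal and the loss drifts downward step by step. Nothing in the procedure resembles a sudden insight, and that is the point of the contrast with human learning.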

In a strong sense, the models that Deep Learning uses can be considered Behaviorist in that they rely almost exclusively on feature presentation with a reward signal. The internal details of how modularity or specialization arise within the network layers are interesting but secondary to the broad use of back-propagation or Gibbs sampling combined with autoencoding. This critique goes back to the early days of connectionism, of course, and it is why connectionism was somewhat sidelined after an initial heyday in the late eighties. Then came statistical NLP, then hybrid methods, then a resurgence of corpus methods, all while image processing moved further and further into the hand-crafted modular space.

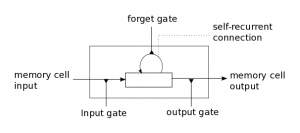

But we can see some interesting developments that start to stir more Cognitivism into this stew. Recurrent Neural Networks provide interesting temporal behavior that is lacking in feedforward NNs, and Long Short-Term Memory (LSTM) NNs help to overcome some specific limitations of recurrent NNs, such as the disconnection between temporally distant signals and the reward patterns.
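As a rough illustration of that temporal disconnection, the sketch below feeds a single input pulse followed by silence into a plain recurrent unit and into a scalar LSTM cell using the standard gate equations. The parameters in `HAND_SET_PARAMS` are hand-picked assumptions, not trained values, and the example only shows forward retention of a signal; it says nothing about how gradients behave during training.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def rnn_step(x, h_prev, w_h=0.5, w_x=1.0):
    """Plain recurrent unit: the state is squashed and rescaled every step."""
    return math.tanh(w_h * h_prev + w_x * x)


def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell using the standard gate equations."""
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much of the new candidate to write
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate value to write
    c = f * c_prev + i * g           # additive cell-state update
    h = o * math.tanh(c)
    return h, c


# Hand-set (w_x, w_h, bias) per gate; hypothetical values, not learned.
# The forget gate stays near 1 and the input gate opens only for large x.
HAND_SET_PARAMS = {
    "forget": (0.0, 0.0, 6.0),
    "input": (12.0, 0.0, -6.0),
    "output": (0.0, 0.0, 6.0),
    "candidate": (4.0, 0.0, 0.0),
}


def run_both(sequence, params=HAND_SET_PARAMS):
    """Run the same input sequence through both units; return final states."""
    h_rnn = 0.0
    h_lstm, c_lstm = 0.0, 0.0
    for x in sequence:
        h_rnn = rnn_step(x, h_rnn)
        h_lstm, c_lstm = lstm_step(x, h_lstm, c_lstm, params)
    return h_rnn, h_lstm, c_lstm


# One pulse at the first step, then 19 steps of silence.
pulse = [1.0] + [0.0] * 19
h_rnn, h_lstm, c_lstm = run_both(pulse)
print(f"plain RNN state after 20 steps: {h_rnn:.6f}")
print(f"LSTM cell state after 20 steps: {c_lstm:.6f}")
```

With these settings the plain unit has all but forgotten the pulse by the end of the sequence, while the gated cell still carries most of it. In a trained network the gates would of course be learned rather than set by hand.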

Still, the modularity and rapid learning transitions elude us. While these methods are enhancing the ability to learn the contexts around specific events (and even the unique variability of contexts), that learning still requires many exposures to get right. We might consider our language or vision modules to have been learned over evolutionary history, and so not expect from-scratch learning within a single lifetime to produce similarly structured modules, but the differences remain qualitative, not merely quantitative. A New Cognitivism requires more work to rise from this New Behaviorism.